June 02, 2011

Measures, Organizations and their Relationships

By: Christine H Morton, PhD

Quality measures, transparency, quality improvement - these 'buzz words' are proliferating in the blogosphere, reflecting increased activity and interest around improving the quality of health care in the United States. How does maternity care fit into this picture? This blog post series contains three parts: Part 1 provided an introduction to the history of the general quality measure landscape. Today, in Part 2, we will deconstruct and demystify the alphabet soup of indicators, measures and organizations involved and explain their relationship to one another. Part 3 will review the current National Quality Forum (NQF) perinatal measures and discuss The Joint Commission (TJC) Perinatal Core Measure Set, describe how these measures are being used by various organizations and/or states, and discuss their limitations as well as their potential. We will conclude with suggestions on how maternity care advocates can engage with maternal quality improvement efforts on national and local levels.

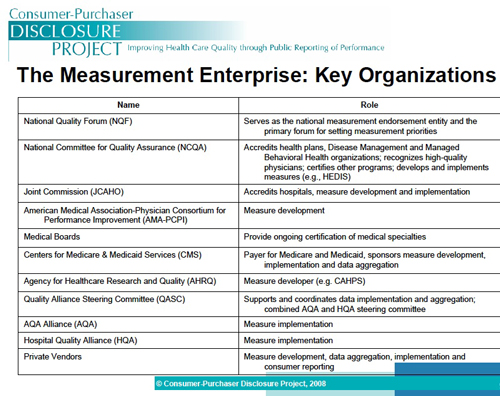

In today's post, we underscore three key points. First, the utility of a measure is dictated by the quality of the data being measured. A huge amount of human and financial resources goes into constructing, implementing, and analyzing measures, and into making improvements based on their results. For a measure to achieve maximum utility, it must be carefully constructed to meet its stated purpose and must have a high degree of reliability and validity. However, this is not enough. Our second point is that the measure must also use data that is feasible to collect and analyze. Measures, therefore, are highly constrained by the data that is actually available. Administrative data sets may contain birth certificate information, patient discharge diagnoses (ICD-9 or ICD-10 codes), or procedure codes (diagnosis-related group, or DRG, data). Because this data was not designed or collected with quality improvement measures in mind, there may not be a natural fit between data and measure. Additionally, the types of administrative data available, and the methods for collecting that data and ensuring its accuracy, can vary from hospital to hospital and from state to state, making it difficult to extract the uniform data necessary to compare 'apples to apples.' Third, a variety of organizations intersect in this landscape, each with its own set of organizational and professional interests regarding quality measure development, endorsement and implementation, which adds another layer of complexity to the picture (see Figure 1 below).

Figure 1: The Measurement Enterprise: Key Organizations

Quality measures, when reported in a timely and accurate way, can exert a large influence over practice, even in the face of these complexities and challenges. What is measured comes to stand for overall 'quality,' however limited in focus any particular quality measure may be. This is why it is so important for maternity care advocates to understand where we are and where we need to go. There are many gaps in the measurement landscape for maternity care. For example, we currently lack maternity care measures that address the full episode of care, including prenatal visits and tests, coordination of care, intrapartum care, and postpartum care[1]. Longitudinal aspects of ongoing morbidity are not captured, including postpartum readmissions or follow-up visits (of the woman and/or infant) via the Emergency Department or provider's office. A major goal of maternal quality measure developers is to identify and fill these gaps. However, both developers and consumers of measures need to evaluate how measures are constructed for maximum utility and benefit, be aware of instances in which measures are not feasible or appropriate, and consider how and when to use quality measures in concert with other quality improvement strategies, such as provider benchmarking and public reporting.

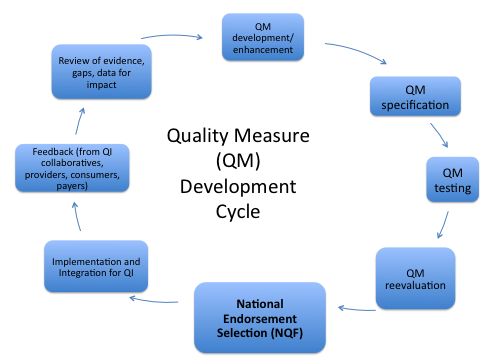

Figure 2: PCPI Cycle of Measure Development, adapted

Situating Measures in the Overall Safety & Quality Landscape

The patient safety and quality improvement umbrella covers a wide range of activities; quality measures, quality indicators, reporting of adverse events, and risk management are broad categories of QI activity. Many of these rely on labor-intensive medical chart review. However, chart review is not feasible for large-scale comparisons across organizations and over time. In this blog post, we will focus on defining quality measures. It is important to note that data measurement is used in both quality measures and quality indicators. This can be confusing, since there is sometimes overlap in the specific data being measured. For instance, the rate of primary cesarean section among low-risk women can be both a quality indicator and a quality measure. The distinction between indicators and measures lies in how the data is used. Quality indicators are not direct measures of quality; they identify potential problem areas that need further review and investigation, usually through a peer review process. Organizations that use quality indicators to identify potential problems include the American Congress of Obstetricians and Gynecologists (ACOG), the Agency for Healthcare Research and Quality (AHRQ), which publishes the AHRQ Quality Indicators (QIs), and the Institute for Healthcare Improvement (IHI). In contrast, quality measures are designed to be both direct measures of quality and a way to address identified problem areas. They are interventions that create change by giving organizations timely feedback and by giving consumers a vehicle for evaluating and choosing healthcare providers, which in turn pressures organizations to change practices in ways that are reflected in the measures (see Table 1).

TABLE 1: Quality Indicators and Quality Measures Compared

| Indicators identify potential problems in one of three areas | Measures address identified problem areas where quality is lacking in one of five domains |
| --- | --- |
| Prevention Quality Indicators: prevention of hospitalization for certain conditions (e.g., diabetes) | Structure: reflects a provider's or organization's capacity to give quality care (e.g., provider staffing ratios) |
| Inpatient Quality Indicators: quality of care inside the hospital, e.g., mortality associated with certain conditions; utilization of certain procedures (e.g., cesarean section); volume of certain procedures | Process: reflects generally accepted recommendations for practice (e.g., administering antenatal steroids for lung maturity) |
| Safety Indicators: provide information about complications and adverse events | Outcome: reflects the impact of the health service on the health of a patient (e.g., birth injuries, neonatal mortality) |
| | Access: access to services and disparity in receiving services |
| | Patient Experience: derives from patient satisfaction surveys |

Key Considerations for Quality Measures

When constructing measures, there are additional key considerations that affect both the feasibility of data collection and the potential impact of a measure. The first is the level of measurement: which level of care will the collected data reflect? Measures provide information about quality of care at the level of the provider, medical group, hospital, or population (such as a state's overall performance). At the micro level, data may be harder to collect, but the information may be more specific and thus more useful for bringing about practice change. Increasingly, there is pressure to move toward measuring the care of individual providers, but this level of measurement can be controversial in maternity care. Modern QI theories typically conceptualize care as a team effort composed of the individual contributions of medical providers and staff members. During the course of a labor, many different clinicians are likely to have made consequential process and outcome decisions, yet only the physician whose name is on the birth certificate would be associated with the ultimate outcomes of the case. The second key consideration is the level of release of the data: who will have access to it? Data may be collected and publicly reported; it may be collected by states or other organizations and then made available to individual hospitals for QI purposes; or it may be used for internal benchmarking only and never leave the walls of the hospital where it is collected.
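To make the level-of-measurement idea concrete, here is a minimal Python sketch that rolls the same birth records up to the provider, hospital, and state level. The records, the field names (`state`, `hospital`, `provider`, `cesarean`) and the helper `rate_by_level` are all hypothetical and chosen only for illustration; they do not reflect any organization's actual data layout.

```python
from collections import defaultdict

# Hypothetical birth records; every field name and value is illustrative.
# "provider" stands for the single clinician recorded for the birth.
births = [
    {"state": "CA", "hospital": "H1", "provider": "P1", "cesarean": True},
    {"state": "CA", "hospital": "H1", "provider": "P1", "cesarean": False},
    {"state": "CA", "hospital": "H1", "provider": "P2", "cesarean": False},
    {"state": "CA", "hospital": "H2", "provider": "P3", "cesarean": True},
    {"state": "CA", "hospital": "H2", "provider": "P3", "cesarean": False},
    {"state": "CA", "hospital": "H2", "provider": "P3", "cesarean": False},
]


def rate_by_level(records, level):
    """Return {unit: (events, total, rate)} for the chosen level of measurement."""
    counts = defaultdict(lambda: [0, 0])  # unit -> [events, total]
    for record in records:
        counts[record[level]][0] += int(record["cesarean"])
        counts[record[level]][1] += 1
    return {unit: (e, n, e / n) for unit, (e, n) in counts.items()}


# The same six births, summarized at three different levels.
for level in ("provider", "hospital", "state"):
    print(level, rate_by_level(births, level))
```

In this toy data, provider P2 has a single birth, so the provider-level rate rests on very few cases, while the state-level rate pools all six. The sketch also attributes each birth to one recorded provider, which is exactly the simplification that makes provider-level measurement contentious for team-based care.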

Defining and Developing Quality Measures

Although early measures typically reported outcomes of hospital or provider care, more recently the types of measures have expanded to include those that assess process, patient experience, and structural capacity, as shown in Table 1 above. Many organizations have specified criteria for assessing quality measures, drawing heavily on the definition of quality outlined by the Institute of Medicine in its 2001 report, Crossing the Quality Chasm, as the Six Aims of Quality. Here, quality refers to clinical care that is uniformly:

Safe: Patients not harmed by care intended to help them

Effective: Based on evidence and produces better outcomes than alternatives

Patient-Centered: Focuses on patient's experiences, needs and preferences

Timely: Provides seamless access to care without delays

Efficient: Avoids waste including unnecessary procedures and re-work

Equitable: Assures fair distribution of resources based on patients' needs

AHRQ and NQF have both specified criteria for developing and evaluating quality measures. The process whereby a measure is constructed and evaluated is very technical, dependent on an intimate familiarity with the data points that can be used by hospitals to construct these measures. The desirable attributes of a measure can be grouped according to three key broad conceptual areas within which narrower categories provide more detail. These three areas are (1) importance of a measure, (2) scientific soundness of a measure, and (3) feasibility of a measure. (See Table 2 below.)

TABLE 2: AHRQ Quality Measures Desirable Attributes

| Area | Desirable attribute |
| --- | --- |
| Importance of the Measure (five aspects) | Relevance to stakeholders |
| | Health importance |
| | Applicable to measuring the equitable distribution of health care |
| | Potential for improvement |
| | Susceptibility to being influenced by the health care system |
| Scientific Soundness: Clinical Logic | Explicitness of evidence |
| | Strength of evidence |
| Scientific Soundness: Measure Properties | Reliability: the results should be reproducible and reflect results of action when implemented over time; reliability testing should be documented. |
| | Validity: the measure is associated with what it purports to measure. |
| | Allowance for patient/consumer factors as required: the measure allows for stratification or case-mix adjustment. |
| | Comprehensible: the results should be understandable for the user who will be acting on the data. |
| Feasibility | Explicit specification of numerator and denominator: statements of the requirements for data collection should be understandable and implementable. |
| | Data availability: the data source that is needed to implement the measure should be available, accessible, and timely. The burden of measurement should also be considered, where the costs of abstracting and collecting data are justified by the potential for improvement in care. |
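Two of the attributes in Table 2, explicit specification of numerator and denominator and allowance for stratification, can be illustrated with a short Python sketch. It is only a sketch under stated assumptions: the function `measure_rate`, the record fields (`eligible`, `treated`, `payer`) and the sample records are hypothetical and are not drawn from AHRQ or NQF specifications.

```python
from collections import defaultdict


def measure_rate(records, in_denominator, in_numerator, stratify_by=None):
    """Compute numerator/denominator rates, optionally per stratum.

    Records outside the denominator are skipped before the numerator test
    is applied, so the numerator is always a subset of the denominator.
    """
    totals = defaultdict(lambda: {"numerator": 0, "denominator": 0})
    for record in records:
        if not in_denominator(record):
            continue
        stratum = record[stratify_by] if stratify_by else "all"
        totals[stratum]["denominator"] += 1
        if in_numerator(record):
            totals[stratum]["numerator"] += 1
    # A stratum only exists once it has at least one denominator case,
    # so the division below cannot hit zero.
    return {
        stratum: {**t, "rate": t["numerator"] / t["denominator"]}
        for stratum, t in totals.items()
    }


# Hypothetical process measure: share of eligible patients who were treated.
records = [
    {"eligible": True, "treated": True, "payer": "Medicaid"},
    {"eligible": True, "treated": False, "payer": "Medicaid"},
    {"eligible": True, "treated": True, "payer": "private"},
    {"eligible": False, "treated": False, "payer": "private"},
]

overall = measure_rate(records, lambda r: r["eligible"], lambda r: r["treated"])
by_payer = measure_rate(
    records, lambda r: r["eligible"], lambda r: r["treated"], stratify_by="payer"
)
print(overall)
print(by_payer)
```

Writing the denominator and numerator as separate, named rules keeps the specification explicit, and the optional `stratify_by` argument shows the simplest form of allowing for patient factors. Real case-mix adjustment is usually more involved than simple stratification.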

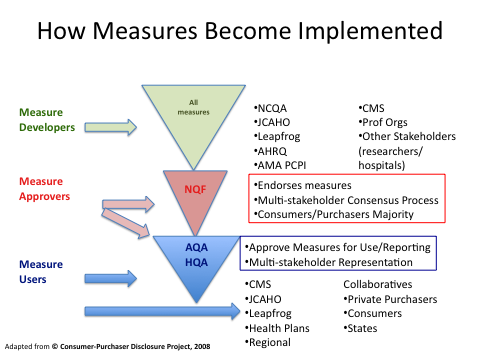

How Measures Become Endorsed

In the midst of the accreditation, standardization and quality movement outlined in our previous post, numerous organizations were vying to define and promote particular quality measures. In 1999, pressures from payers, consumers and providers coalesced into the creation of a single agency, the National Quality Forum (NQF), to consider, evaluate and endorse quality measures submitted to it. NQF also provides a set of criteria used for evaluating measures. NQF is solely responsible for endorsing measures; it is not involved in developing, collecting or evaluating them. There are clinically specific divisions within NQF, including perinatal services. Historically, most of the perinatal measures have focused on neonatal outcomes, both in general and in the NICU. However, in 2008, the NQF released National Voluntary Consensus Standards for Perinatal Care, which included 17 measures. Nine of these reflect obstetric care and outcomes, and two of those are of interest here: the NTSV (nulliparous term singleton vertex) cesarean rate and elective delivery (ED) before 39 weeks. The remaining eight perinatal quality measures are, again, mostly related to neonatal care issues.
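The NTSV acronym is itself a denominator definition: it names the population of births a measure counts over. The Python sketch below spells that out, assuming the measure is reported as the share of cesarean births among NTSV births. The field names, the 37-week threshold used for "term" and the sample records are assumptions made for illustration; endorsed specifications contain further detail that is not reproduced here.

```python
def is_ntsv(birth):
    """True when a birth is nulliparous, term, singleton and vertex."""
    return (
        birth["prior_births"] == 0              # nulliparous: no previous births
        and birth["gestational_weeks"] >= 37    # term (threshold assumed here)
        and birth["fetus_count"] == 1           # singleton
        and birth["presentation"] == "vertex"   # head-first presentation
    )


def ntsv_cesarean_rate(births):
    """Cesarean births divided by all NTSV births; None if the cohort is empty."""
    cohort = [b for b in births if is_ntsv(b)]
    if not cohort:
        return None
    return sum(b["cesarean"] for b in cohort) / len(cohort)


# Hypothetical records: only the first two belong to the NTSV cohort.
sample = [
    {"prior_births": 0, "gestational_weeks": 39, "fetus_count": 1,
     "presentation": "vertex", "cesarean": True},
    {"prior_births": 0, "gestational_weeks": 40, "fetus_count": 1,
     "presentation": "vertex", "cesarean": False},
    {"prior_births": 2, "gestational_weeks": 39, "fetus_count": 1,
     "presentation": "vertex", "cesarean": True},
    {"prior_births": 0, "gestational_weeks": 38, "fetus_count": 1,
     "presentation": "breech", "cesarean": True},
]

print(ntsv_cesarean_rate(sample))  # 0.5
```

Restricting the denominator to a comparatively low-risk, clinically similar group is what lets the resulting rate be compared across hospitals with different patient mixes.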

Once the NQF endorses quality measures, organizations can then select from among them for their own purposes. These organizations include the Joint Commission, the leading hospital accreditation organization, which, shortly after NQF endorsed its latest set, incorporated five of the measures into its revised perinatal core measure set. Hospitals could begin reporting on this set in April 2010. It is important to note that to receive Joint Commission accreditation, hospitals have to report on a certain number of core measure sets, but they can choose which ones. Hospitals that have a lot of births may or may not elect to report on the perinatal core measures.

Individual states can use the NQF measures as well. And, as noted above, the Affordable Care Act (ACA) of 2010 mandated that adult measures of quality be included in evaluations of Medicaid services, so states are now looking at these issues. A notable example here has been Ohio. While its efforts predated the ACA, they have by all accounts been highly successful in reducing the number of elective deliveries prior to 39 completed weeks of gestation.

Finally, organizations such as Leapfrog, a consortium for purchasing health care that represents Fortune 500 companies, are able to use the NQF-endorsed measures. Leapfrog administers a voluntary survey among hospitals, which focuses on practices that have been identified as promoting patient safety. Survey results are made publicly available to influence decisions made by large buyers of health care. Currently, the survey includes process questions (borrowed from the NQF-endorsed perinatal standards) that relate to both high-risk and normal deliveries.

Public Reporting of Quality Measures

As noted above, quality measures have been developed for several years using data from Medicare, a federally funded health care program, which has long required hospitals and providers to report them to the public, insurance companies and the government. Data from these ongoing efforts can therefore be found online through the HospitalCompare.org project. Some states have their own version; in California, it is CalHospitalCompare.org. Organizations like the Dartmouth Atlas have conducted many useful studies using this data, showing the extent of regional variation in certain procedures and concluding that this variation is based on provider, not patient, characteristics or practices. The scores of individual hospitals on TJC's quality measure sets are available through the Joint Commission's free public quality report, Quality Check®, at http://www.qualitycheck.org. Users can view organization-specific results online or download them for free. For each set of measures, the site reports composite scores for the individual hospital, along with national and state data for comparison.

PART 3: Maternal Quality Care - How do we measure AND achieve it?

In Part 3 of our primer, we will review the current NQF perinatal measures and discuss The Joint Commission Perinatal Core Measure Set, using a case study to describe how these measures are being used by various organizations and/or states, and discuss their limitations as well as their potential. We will conclude with suggestions on how maternity care advocates can engage with maternal quality improvement efforts on national and local levels.

[Editor's note: to read Part Three of this series - which is segmented into five sections, spanning 12/26/11-12/30/11, go here.]

Posted by: Christine Morton, PhD (CMQCC) and Kathleen Pine (University of California, Irvine)

References:

Elliott Main. Quality Measurement in Maternity Services: Staying One Step Ahead. November, 2010. Childbirth Connection. Available at: http://transform.childbirthconnection.org/2011/03/performancewebinar/

R. Rima Jolivet and Elliott Main. Quality Measurement in Maternity Services: Staying One Step Ahead. November, 2010. Childbirth Connection. Available at: http://transform.childbirthconnection.org/2011/03/performancewebinar/
